[en] June: Do AIs Dream of Generative Art?

One day we woke up and social media had been hijacked by AI. I shot a roll of Kodak on a medium format camera, then used only text prompts to recreate each frame. The process became an exploration of authenticity, creativity, and what happens when machines start making art.

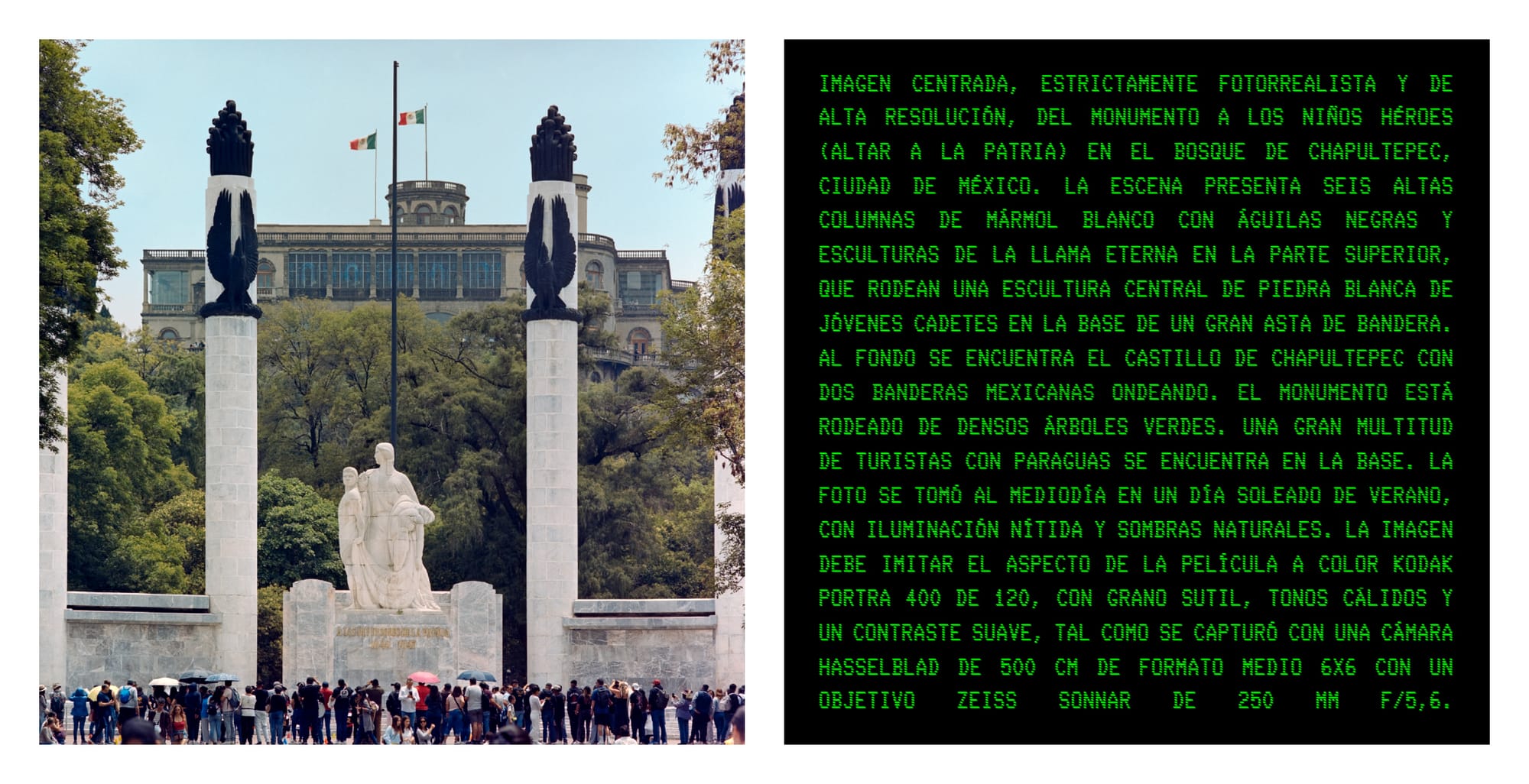

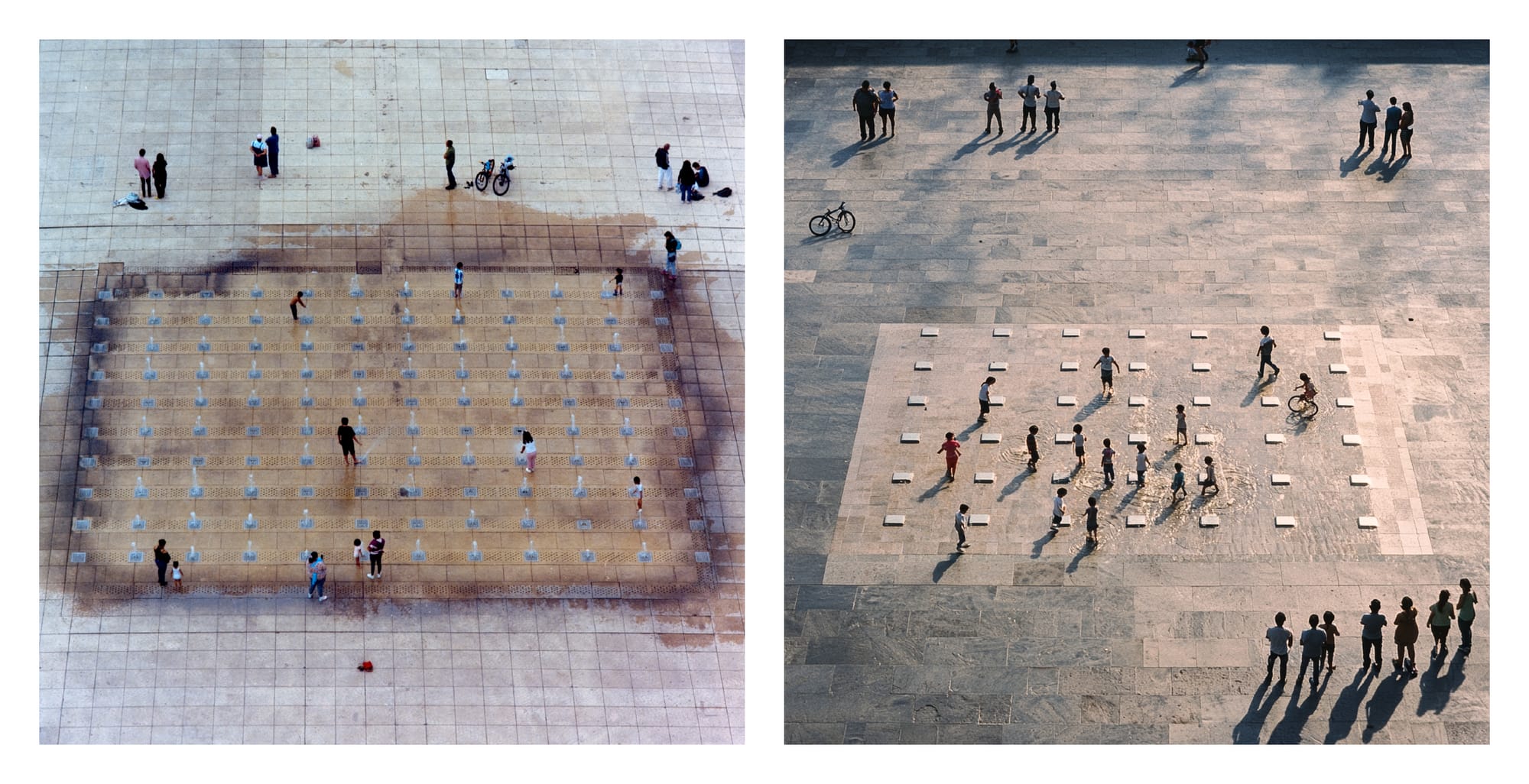

![[en] June: Do AIs Dream of Generative Art?](/content/images/size/w1200/2025/08/Frame---Monumento-Nin--os-Heroes---1.jpg)

Don't you feel like one day you just woke up and social media had been hijacked by AI? Suddenly, all your friends had Studio Ghibli-style portraits. Cute (yet slightly uncanny) videos of pets and babies performing impossible feats flooded your family's group chat. Then came the fake talk shows and interviews, each ending with a weird punchline or a glitchy twist. The tipping point had arrived: not gradually, as these things are supposed to happen, but all at once. With the back-to-back release of OpenAI's GPT Image 1 and Google's Veo 3, the future we were promised (but never asked for) landed squarely in our hands, demanding attention.

For years now, the media has oscillated between apocalyptic warnings and utopian promises about generative artificial intelligence. I have remained skeptical, even as I use AI-based products daily and watch artists like Rob Sheridan and Christian Hartmann create genuinely engaging work with these tools. The revolutionary potential feels real, but so does the hyperbole surrounding it. Still, faced with this sudden flood of synthetic content, I decided it was time to test these tools firsthand.

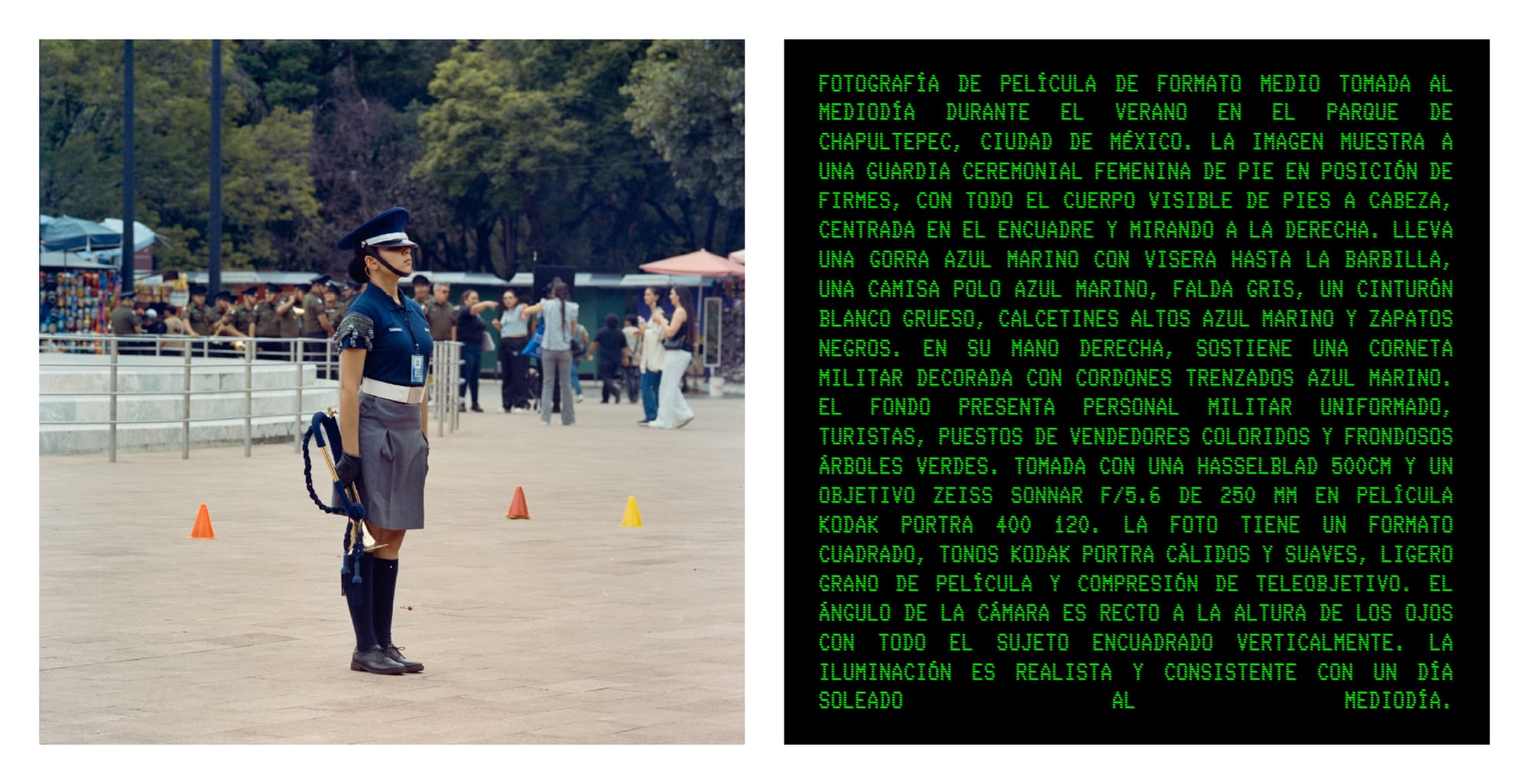

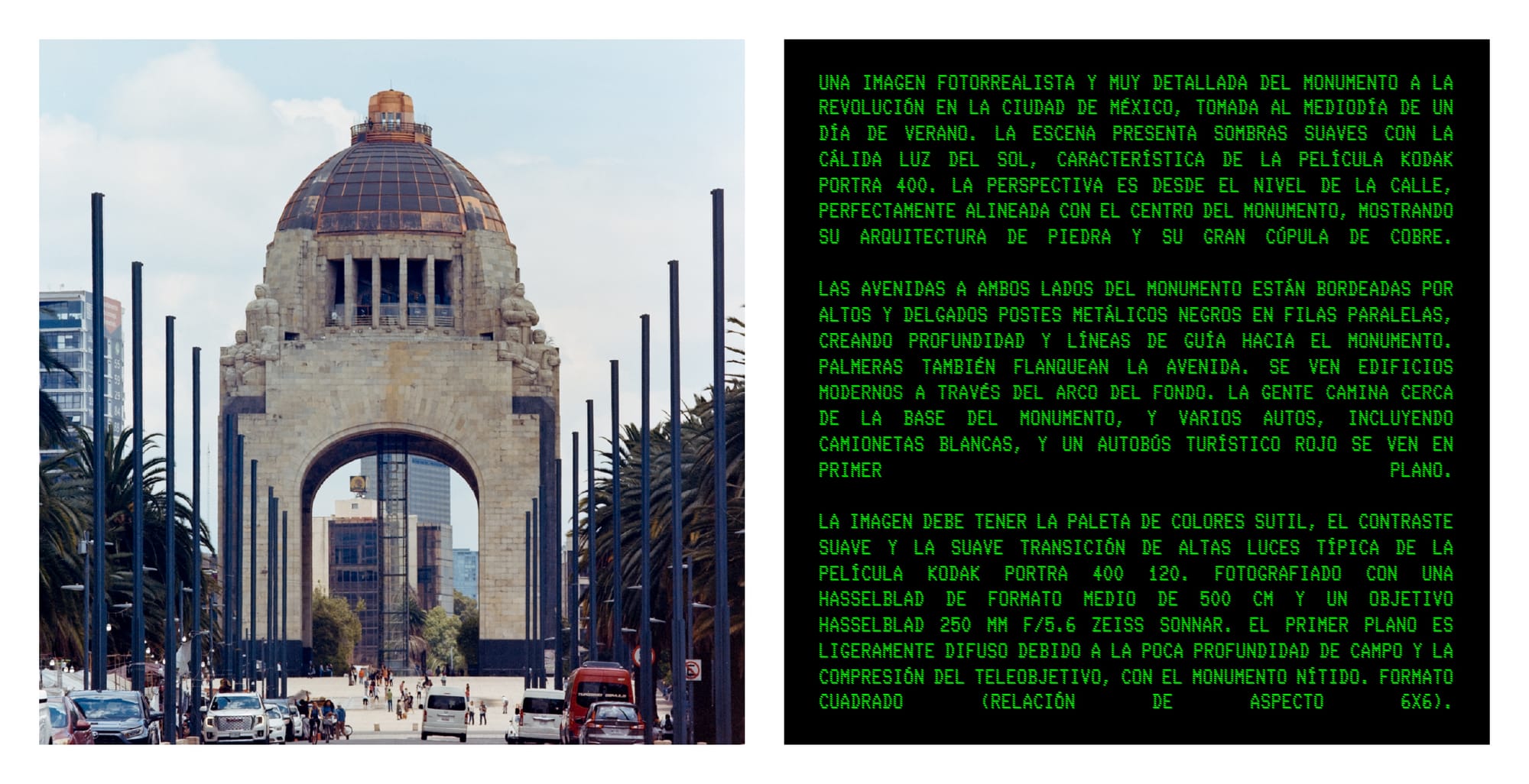

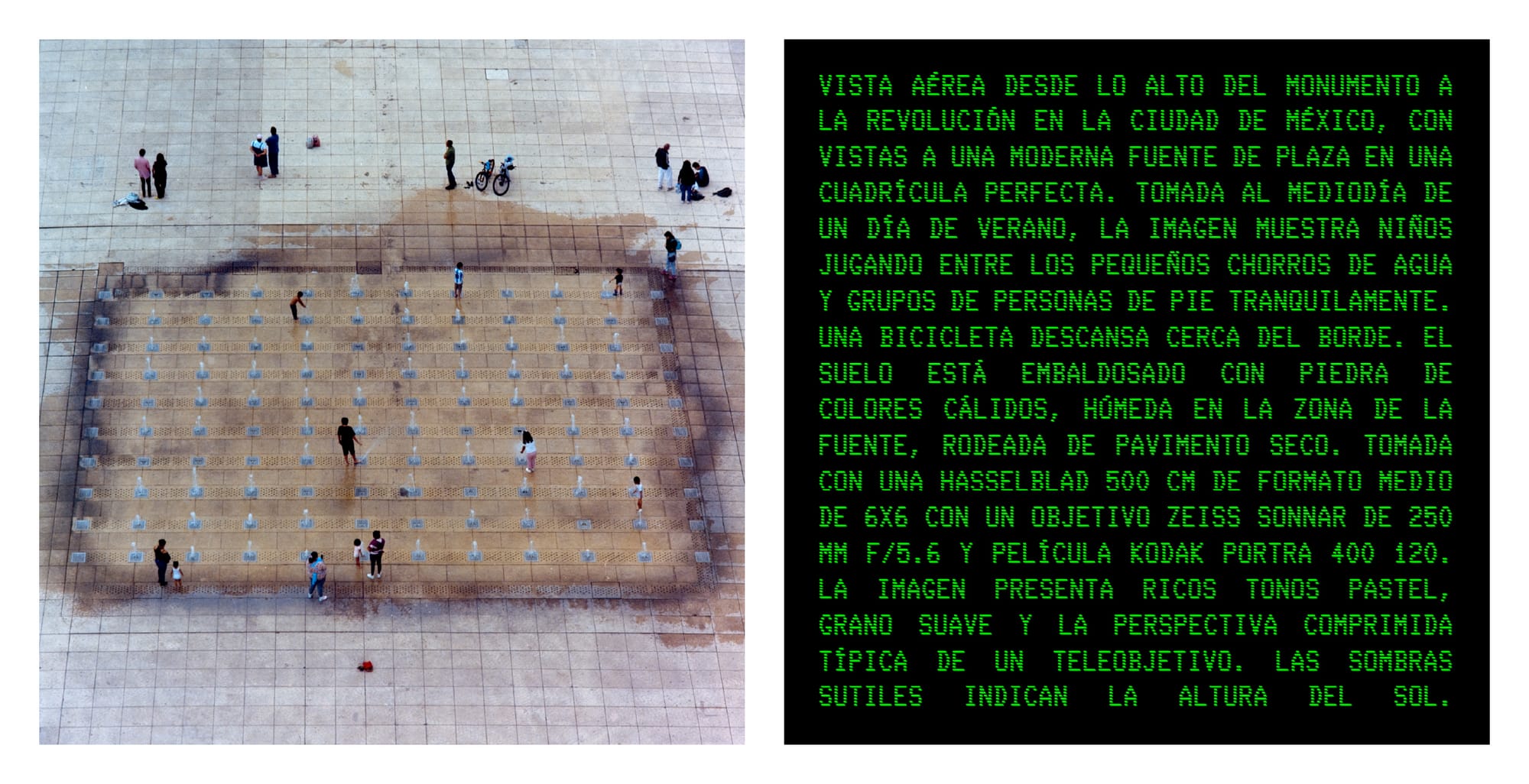

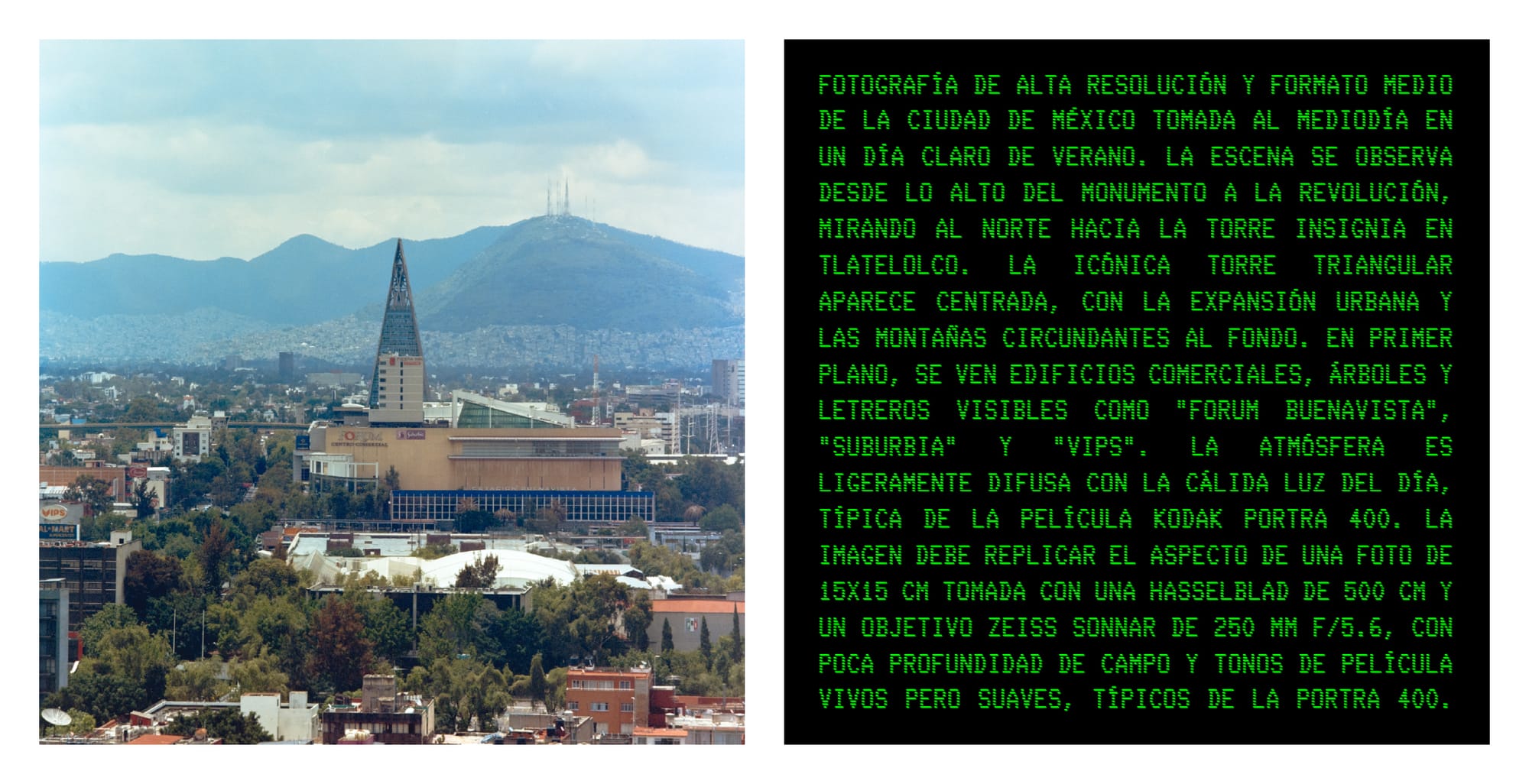

The experiment was straightforward: I shot a roll of 120-format Kodak Portra 400 on a Hasselblad 500C/M, selected my favorite six of the twelve frames, and then used only text prompts to recreate them with AI. No shortcuts, no feeding in the originals: just descriptions of what I saw, letting the machine interpret my words, without tailoring the photos to its capabilities.

The first attempts at recreating my photos revealed the distinct personalities of different AI systems. GPT Image 1 was precise and accurate, correctly placing elements and replicating real locations, but its results felt synthetic and lacked the soul of a true photograph. Midjourney, in contrast, delivered rich mood and a film-like style but often strayed from the original scenes, prioritizing its own artistic flair over faithful representation. This was most evident with actual buildings and landmarks, where its results were beautiful but unpredictable.

The breakthrough came when I began combining the strengths of both systems: using GPT Image 1 to generate accurate compositions, then feeding those synthetic images into Midjourney with prompts crafted to evoke the film aesthetic I was after. Though my original photos never entered the process, the results began to echo what I had captured on film. This became a recursive process that reminded me of the control theory classes I took at university: a closed-loop system where outputs became inputs. The AI acted as both tool and collaborator in a continual cycle of refinement.

The results were simultaneously impressive and revealing. At first glance, the AI-generated images could be startlingly close to my originals. But a quick inspection always revealed the telltale signs of synthetic creation: missing details, impossible perspectives, textures that felt just slightly wrong. They were convincing enough to pass in a casual scroll through social media, but they fundamentally lacked the complexity and authenticity of the real thing.

What surprised me most was how different the creative process felt. At first, generating images with a few words felt magical, but refining them to match a clear vision quickly became tedious and unpredictable: too much tweaking often made things worse, and achieving consistency was frustrating. Photography is different: there, the process itself brings me joy, even when the results are disappointing. Yet despite the frustration, I found myself drawn to working with AI (especially with Midjourney). There’s something captivating about watching words become images, and when embraced for its surreal, otherworldly quality, AI can become a powerful, intoxicating tool for imagination.

This experiment didn't alter my relationship with photography; if anything, it deepened my appreciation for the irreplaceable qualities of the medium. But it did shift my perspective on AI's creative potential. These tools are more engaging than I expected: capable not just of approximation and synthesis, but occasionally of generating something that feels surprisingly evocative. Still, they remain tools, not substitutes. The AI revolution may be real, but it's not the one we've been promised: it's subtler, more complex, and ultimately far more interesting.

Most AI models are trained on massive datasets sourced (sometimes illegally) without consent from or compensation to the original creators: the photographers, artists, filmmakers, and writers whose work underpins these systems. While these issues lie beyond the scope of this experiment, they are critical to the broader conversation. These tools are likely here to stay, but so is the need to reckon with how they're built and whom they affect.